The Complete Guide to AI SEO Optimization for LLM Search

Table of Contents

- Introduction

- Understanding LLM Search and AI SEO: The New Search Paradigm

- Core Principles of AI SEO Optimization for LLM Search

- Actionable Framework: Step-by-Step LLM Search Optimization

- Quick Answer: How do you optimize for LLM search?

- Technical Tactics: On-Page and Off-Page AI SEO for LLM Search

- Agentic AI and the Future of Search: Trends and Opportunities

- Practical Examples: Real-World LLM SEO Optimization in Action

- The Complete LLM SEO Optimization Checklist

- Conclusion: Future-Proof Your SEO for the Age of AI Search

- Key Takeaways

Introduction

Your page ranks, but the answer at the top is generated by an AI and cites someone else. Painful, right?

This is the new reality of AI search engines. They retrieve, reason, and synthesize answers from a small set of sources. If your content is not in that set, your AI search visibility suffers.

This guide shows you exactly how to win that inclusion. You’ll get a practical system for AI SEO Optimization for LLM Search, so your best pages get retrieved, cited, and recommended in answer engines.

We’ll keep it clear and tactical. Short steps, real examples, and code where it helps.

Understanding LLM Search and AI SEO: The New Search Paradigm

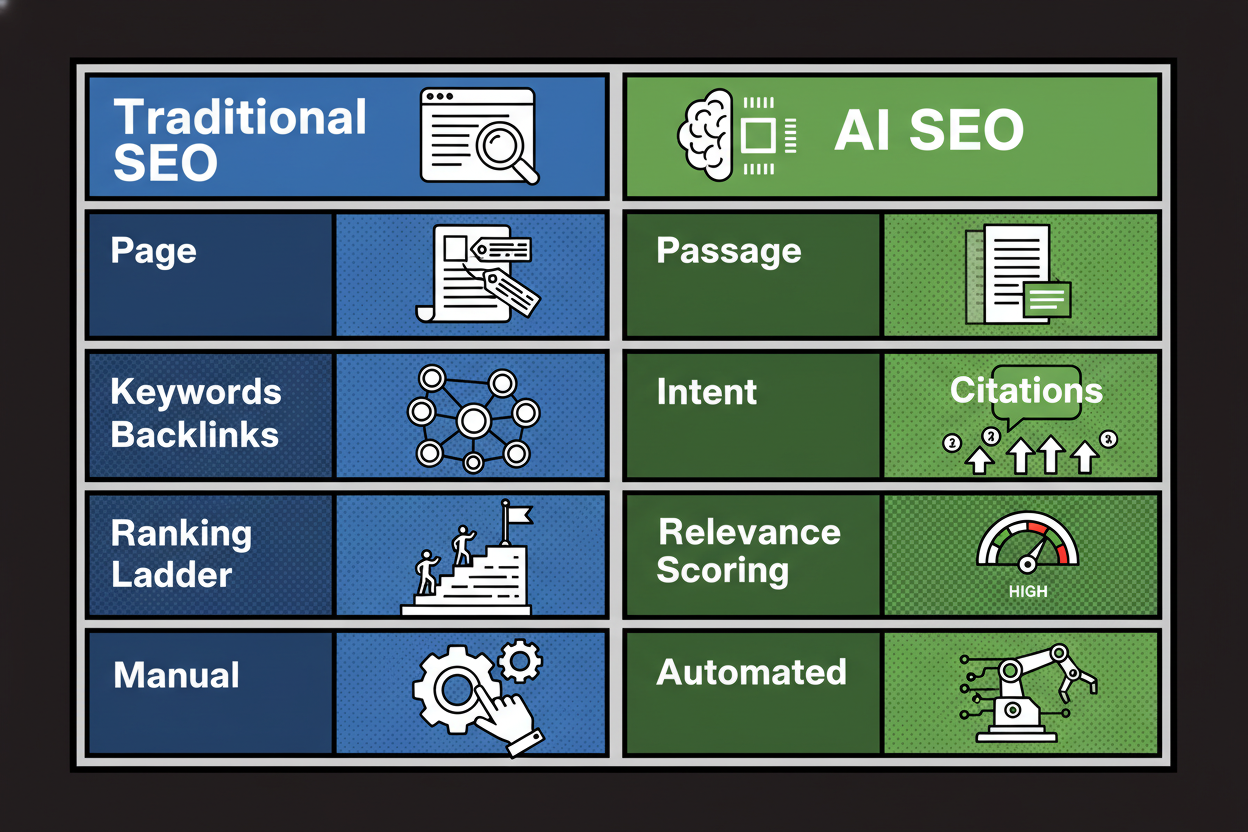

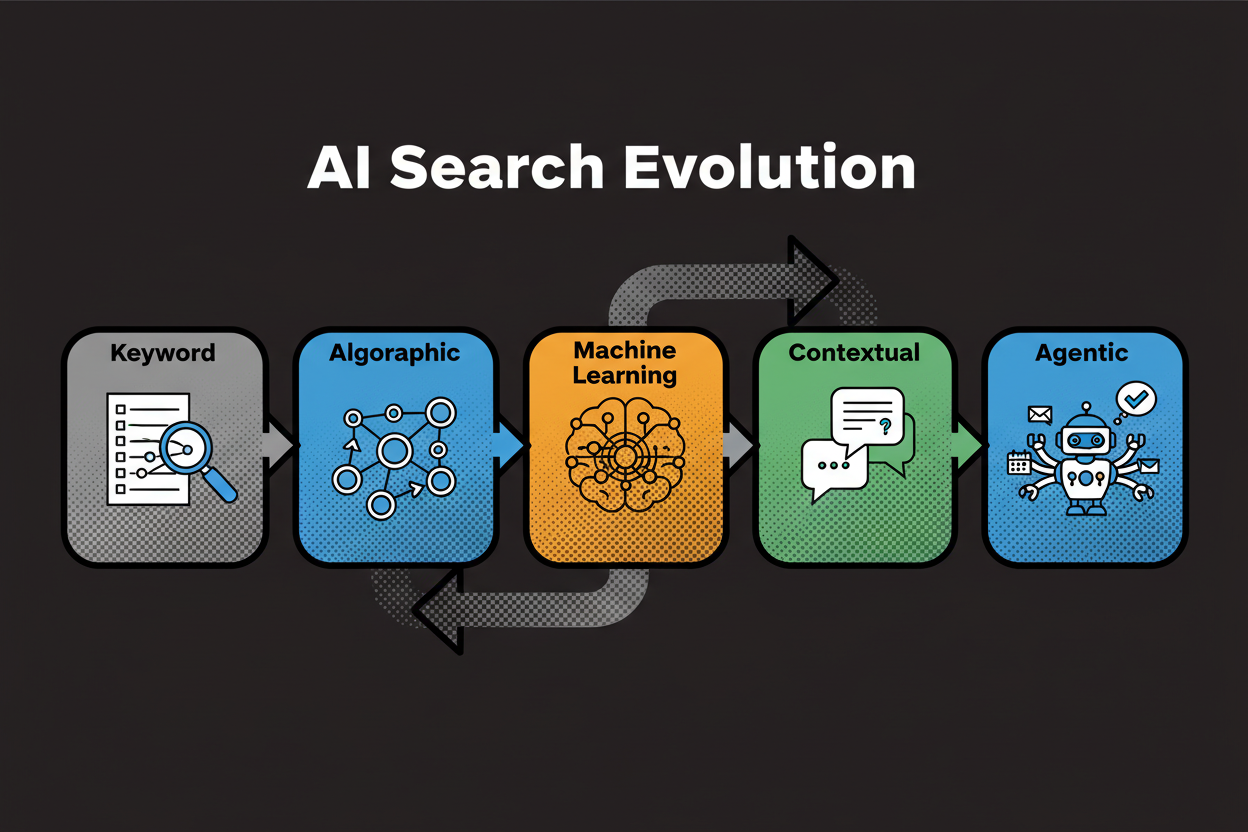

Traditional search ranked links. You clicked, then pieced together an answer yourself.

LLM search flips that flow. The engine composes the answer first, then shows a handful of citations you can verify.

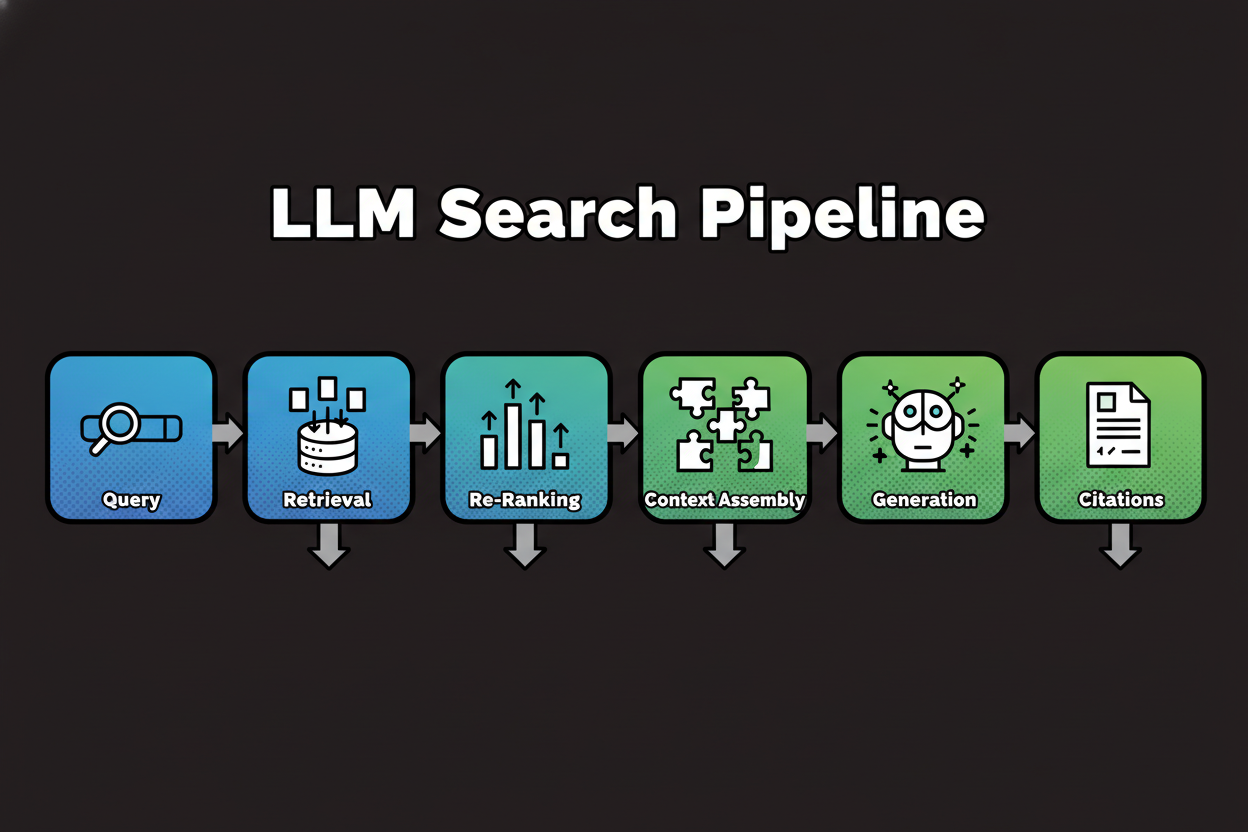

Under the hood, most AI search engines use a retrieval-augmented generation (RAG) pipeline. Here’s the simple version.

- Query parsing and reformulation. The system detects intent, builds semantic embeddings, and may expand the query to improve recall.

- Hybrid retrieval. It searches both keywords and vectors to find relevant passages across the web or a private index.

- Re-ranking. A stronger model re-sorts candidates to promote the most on-point chunks.

- Context assembly. The top passages (often a small set) are stitched with your query to form the LLM’s context window.

- Grounded generation. The model writes an answer based on that context, aiming for factual alignment.

- Citations. Sources that grounded key claims get cited so users can check the evidence.
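To make the retrieval step concrete, here is a toy sketch in Python. It does not reproduce how any particular engine works: the term-count cosine is a crude stand-in for learned embeddings, and the function names, the `alpha` blend weight, and the sample passages are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_score(query: str, passage: str) -> float:
    """Fraction of distinct query terms that also appear in the passage."""
    q_terms, p_terms = set(tokenize(query)), set(tokenize(passage))
    return len(q_terms & p_terms) / len(q_terms) if q_terms else 0.0


def cosine_score(query: str, passage: str) -> float:
    """Cosine similarity over term counts (toy stand-in for embedding similarity)."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    dot = sum(q[t] * p[t] for t in q)
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(sum(v * v for v in p.values()))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query: str, passages: list[str], k: int = 2, alpha: float = 0.5) -> list[str]:
    """Blend both scores, sort best-first, and keep the top k passages."""
    scored = [
        (alpha * keyword_score(query, p) + (1 - alpha) * cosine_score(query, p), p)
        for p in passages
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


# Hypothetical passages, one per "chunk" of a page.
passages = [
    "Schema markup: JSON-LD describes entities so machines can read a page.",
    "Our team went hiking last weekend and took many photos.",
    "Digital PR earns brand mentions that support authority.",
]
top = hybrid_retrieve("what is schema markup", passages, k=1)
print(top)
```

Notice that the passage with a clear, on-topic opening wins on both signals. That is the practical reason to write titled, self-contained chunks.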

Answer engines differ in how they cite and what they favor. Google AI Overviews composes a summary, then links to sources that support its points. Perplexity takes a citation-first approach, where every answer presents source links transparently.

This is not old-school rank-and-click. It’s retrieve, ground, synthesize. Instead of ranking pages broadly, these systems retrieve passages narrowly and build a single answer.

That shift changes what you optimize. You are no longer aiming only for position on a SERP. You are aiming for answer inclusion and citation presence, which is a different game.

The implications for LLM search optimization are significant:

- The unit of competition is the passage, not the whole page.

- Clear, well-titled chunks improve retrieval and re-ranking.

- Entities and relationships help the engine disambiguate who and what you are.

- Authority matters, because the engine wants to cite trustworthy sources.

First steps:

- Pick 5 pages you want cited in AI answers.

- Break each into short, titled sections with crisp definitions.

- Add a Q&A block that mirrors conversational follow-ups.

Core Principles of AI SEO Optimization for LLM Search

Great AI visibility starts with how your content is written and modeled. Four pillars drive answer engine optimization.

Clarity and factuality for AI comprehension

LLMs favor clarity. Short paragraphs, strong headings, and explicit definitions help retrieval and synthesis. Include specific facts, numbers, and claims the model can quote. Tie them to sources the engine can trust. This is how you become quotable.

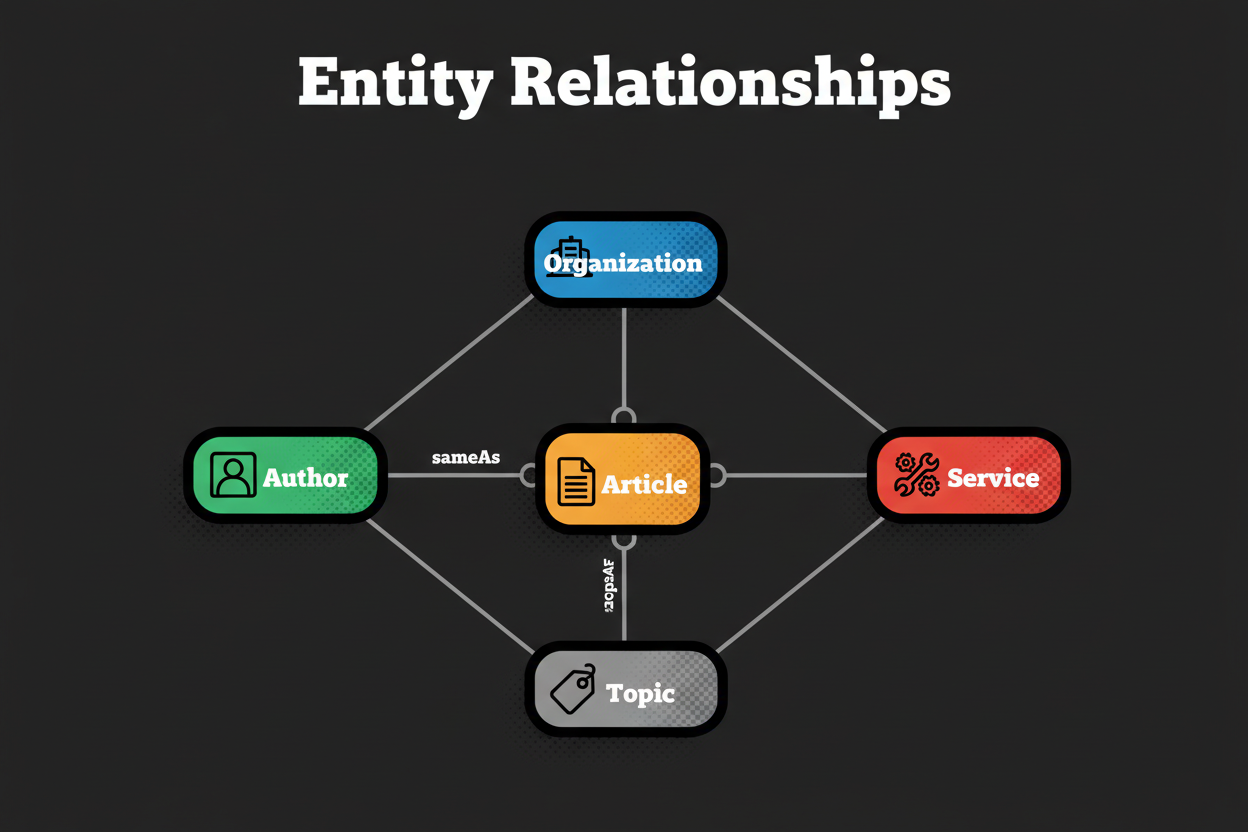

Entity optimization and semantic relationships

AI reads the web as entities and edges, not just strings of text. Create an “entity home” for your brand, products, services, and authors. Use consistent names and connect them via internal links and schema. Link to authoritative profiles with sameAs so the engine knows which “you” is you.

A small change here can move the needle. An FAQ hub that added a brand entity home, author pages, and consistent sameAs links started appearing as a cited source in AI answers for non-branded queries. The win came from disambiguation and clear topical fit.

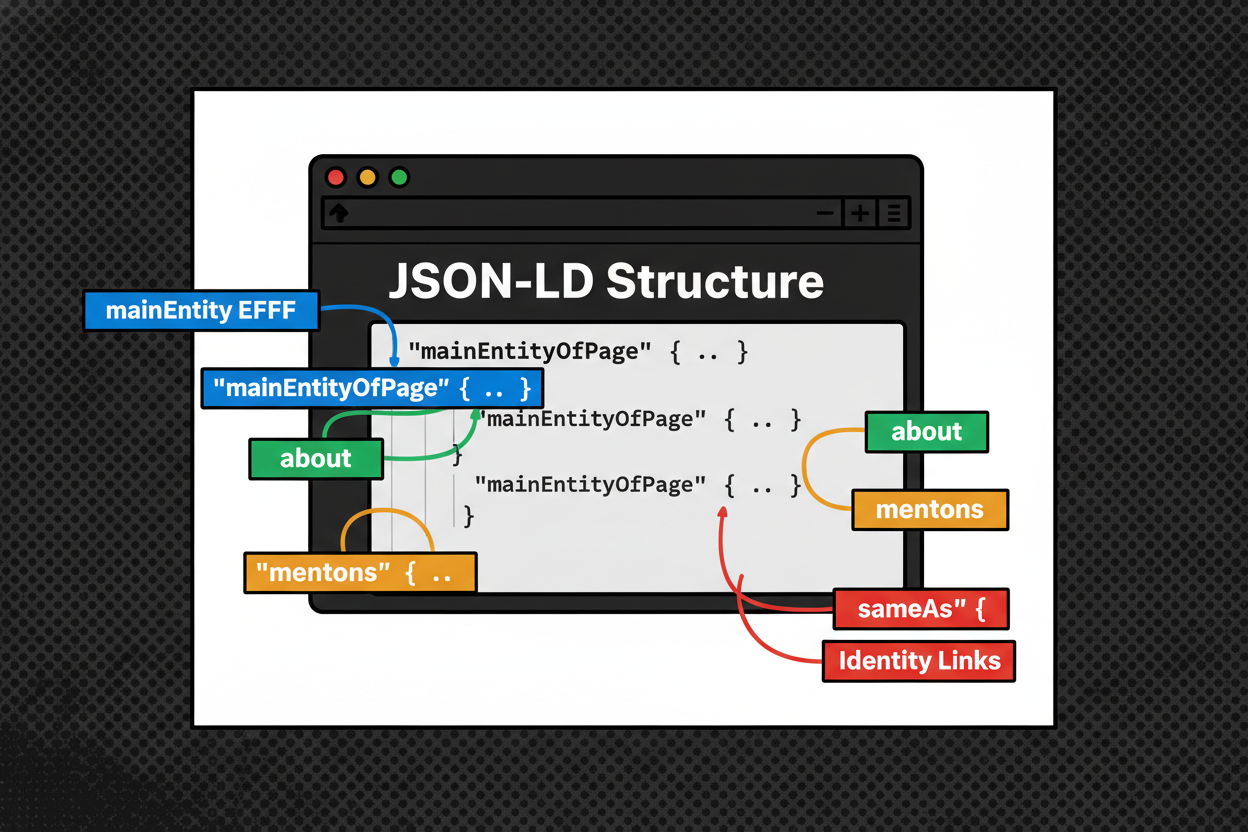

Structured data and machine-readability

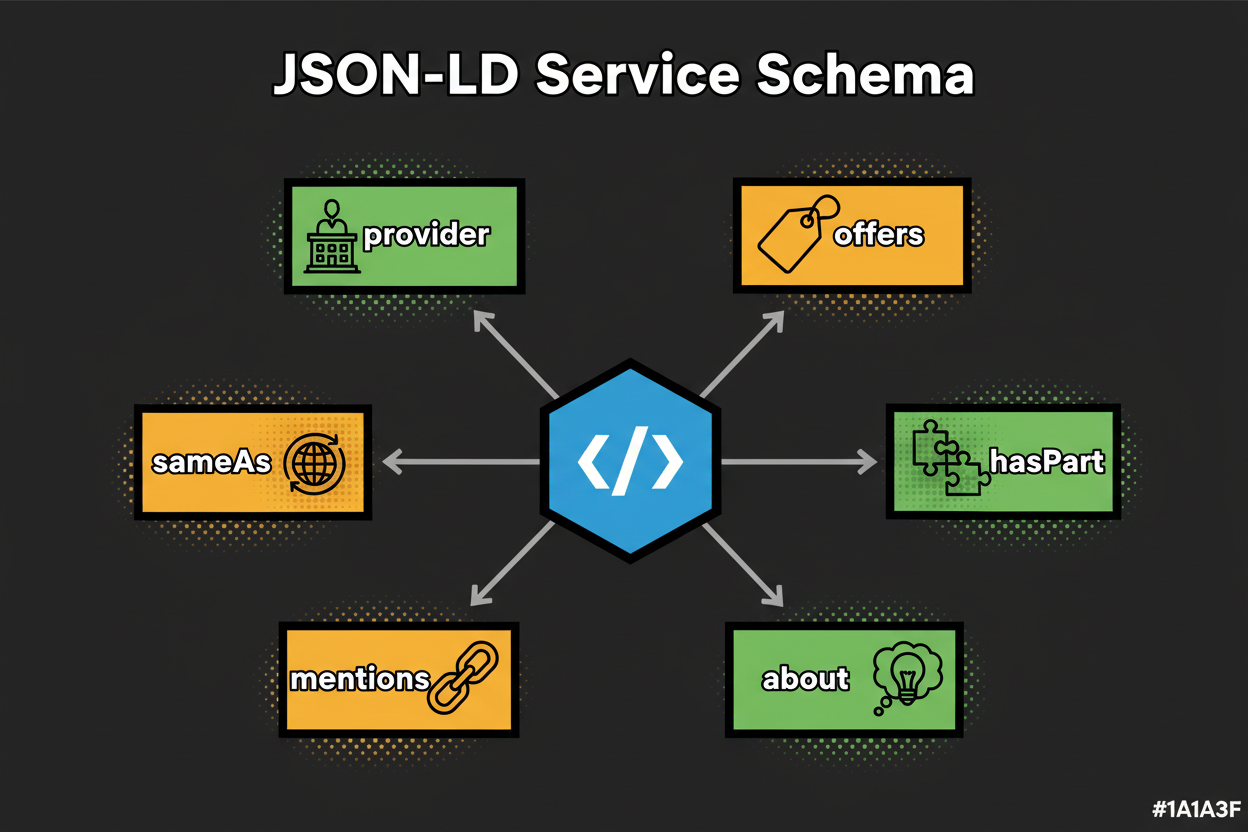

Schema is your machine-to-machine handshake. Use Article, Organization, Person, Service, Product, FAQPage, and HowTo where relevant. Populate mainEntityOfPage, about, mentions, and sameAs to make relationships explicit. Keep your schema consistent across the site.
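Here is a minimal sketch of what that handshake could look like for a single guide, built in Python so the markup can be generated from your CMS data. The domain, URLs, author, and `@id` values are hypothetical placeholders, and `to_script_tag` is a helper name made up for this example.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain

# Article markup that points at entities by @id instead of repeating them.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "@id": f"{SITE}/guides/llm-seo#article",
    "headline": "The Complete Guide to AI SEO Optimization for LLM Search",
    "mainEntityOfPage": {"@type": "WebPage", "@id": f"{SITE}/guides/llm-seo"},
    "author": {"@id": f"{SITE}/authors/jane-doe#person"},  # hypothetical author
    "publisher": {"@id": f"{SITE}/#organization"},
    "about": [{"@type": "Thing", "name": "Search engine optimization"}],
    "mentions": [{"@type": "Thing", "name": "Structured data"}],
}


def to_script_tag(data: dict) -> str:
    """Serialize a JSON-LD dict into a script tag ready for the page head."""
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


snippet = to_script_tag(article)
print(snippet)
```

Generating the markup from one template is a simple way to keep it consistent across the site.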

Brand and author authority signals

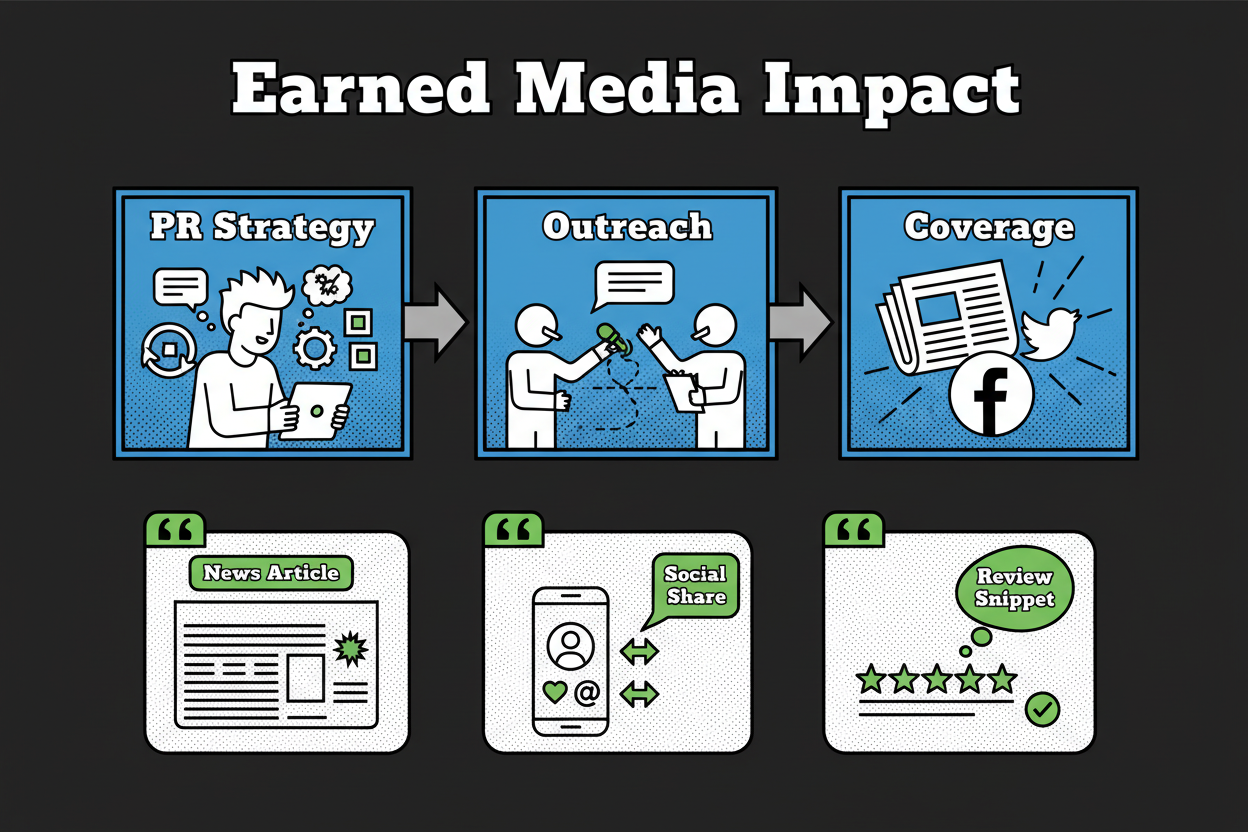

Authority is still currency. The difference is that AI search engines show their homework. Citations tilt toward credible, well-cited brands and authors. Earn mentions and links through digital PR, publish data-backed insights, and keep author profiles consistent across platforms. These signals help you win re-ranking and citation slots.

All four pillars feed a simple goal: make it easy for retrieval to find you, for the model to understand you, and for the answer to cite you.

First steps:

- Publish an entity home for your brand and each key author.

- Add sameAs links to trusted profiles and directories.

- Implement Article and FAQPage schema on one flagship guide.

Actionable Framework: Step-by-Step LLM Search Optimization

You want a practical system you can run weekly. Here it is.

Start with an AI-readiness audit. Review your top pages and ask two questions: can a model quickly extract the key facts, and are your entities unambiguous? If not, you’ll fix that first.

Next, implement entity and schema foundations. Give each core entity an “entity home” and wire it up with Organization, Person, Service or Product, and Article markup. Use about, mentions, sameAs, and mainEntityOfPage to make relationships explicit.

Then shape content around conversational intent. Add Q&A sections, tight definitions, and short summaries that pull their weight. This helps hybrid retrieval and makes your passages quotable.

Finally, build authority. Earn brand mentions and citations through digital PR, expert contributions, and partnerships. Models prefer to cite trusted sources.

Quick Answer: How do you optimize for LLM search?

- Structure content into clear, titled chunks with concise definitions and citations.

- Map and disambiguate entities with schema (Organization, Person, Product/Service) and sameAs links.

- Implement Article/FAQPage JSON-LD and connect about and mentions to related entities.

- Cover conversational intents with on-page Q&A and short summaries for scannability.

- Earn authoritative brand mentions and links through digital PR and expert contributions.

- Publish and refresh fact-rich updates to stay eligible for retrieval and re-ranking.

- Monitor AI citations and answer inclusion, then refine content and schema.

- Repeat for each high-intent page and topic cluster.

Now, zoom into the phases so you can execute with confidence.

Phase 1 - AI-readiness content audit

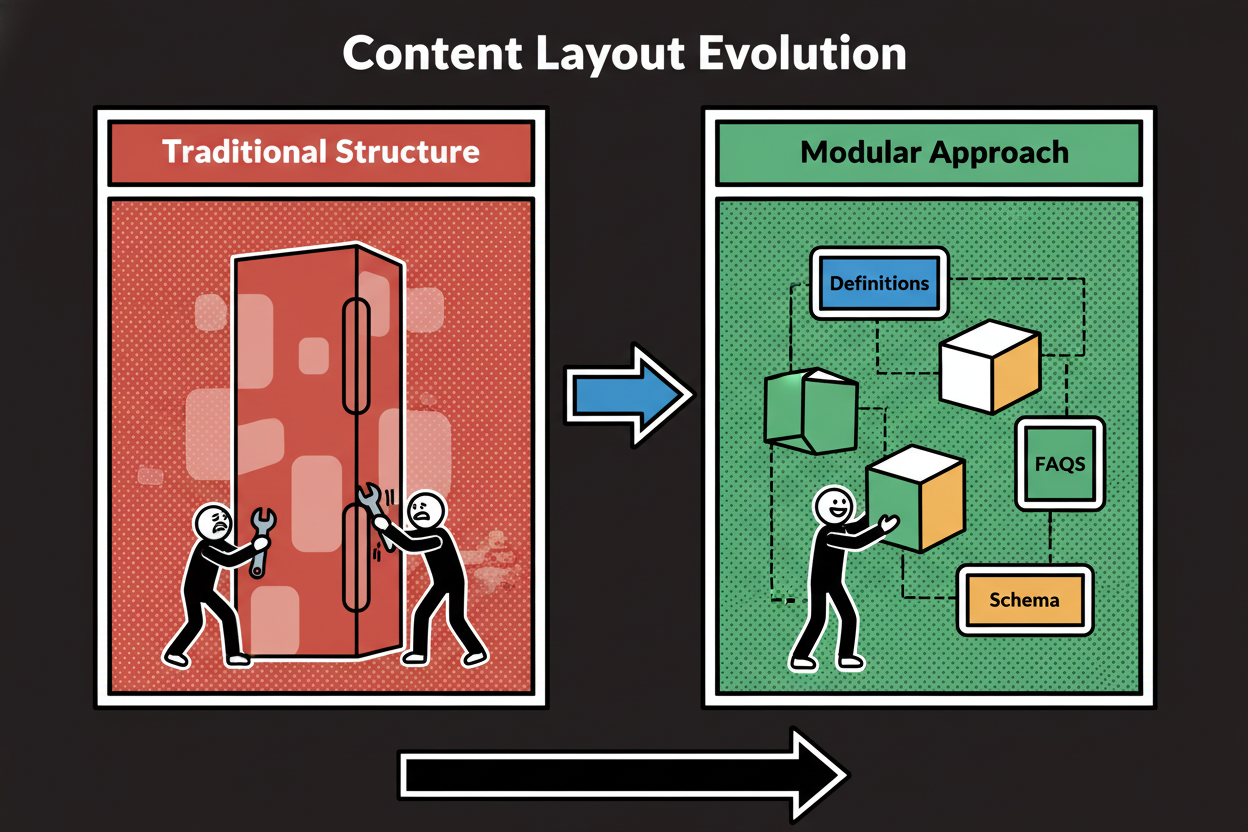

Inventory your top 10 to 20 pages. Check headings, chunking, and factual density. Add crisp definitions where models can lift them. Tighten any bloated sections into scannable, titled blocks.
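A rough way to run this check at scale is to count words per titled section and flag the bloated ones. The sketch below assumes your pages can be exported as Markdown with `##` and `###` headings, and the 150-word threshold is an arbitrary starting point, not a rule any engine publishes.

```python
import re


def audit_sections(markdown: str, max_words: int = 150) -> list[dict]:
    """Split a page on H2/H3 headings and report the word count of each section."""
    sections = [["(intro)", []]]
    for line in markdown.splitlines():
        match = re.match(r"^#{2,3}\s+(.*)", line)
        if match:
            # A new heading starts a new section.
            sections.append([match.group(1).strip(), []])
        else:
            sections[-1][1].append(line)

    report = []
    for title, lines in sections:
        words = len(" ".join(lines).split())
        if title == "(intro)" and words == 0:
            continue  # no text before the first heading
        report.append({"title": title, "words": words, "too_long": words > max_words})
    return report


# Hypothetical page: one tight section and one bloated section.
sample = (
    "## What is LLM search?\n"
    "LLM search composes an answer first.\n\n"
    "## Long section\n" + "word " * 200
)
for row in audit_sections(sample):
    print(row)
```

Sections flagged `too_long` are your first candidates for splitting into smaller, titled blocks.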

Phase 2 - Entity and schema implementation

Create entity homes for your brand and authors. Implement JSON-LD for Organization, Person, Article, and Service or Product. Link out with sameAs to authoritative profiles. Use about and mentions to connect pages to the entities they discuss.
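As a sketch, the brand and author entity homes could be expressed as one JSON-LD `@graph`. Every name, URL, and profile link below is a hypothetical placeholder. Swap in your real entity homes and only list `sameAs` profiles you actually control.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain
ORG_ID = f"{SITE}/#organization"

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": ORG_ID,
            "name": "Example Co",  # hypothetical brand
            "url": f"{SITE}/",
            "sameAs": [
                "https://www.linkedin.com/company/example-co",  # hypothetical profile
                "https://www.wikidata.org/wiki/Q00000000",  # placeholder entity ID
            ],
        },
        {
            "@type": "Person",
            "@id": f"{SITE}/authors/jane-doe#person",
            "name": "Jane Doe",  # hypothetical author
            "url": f"{SITE}/authors/jane-doe",
            "worksFor": {"@id": ORG_ID},  # links the author to the brand entity
            "sameAs": ["https://www.linkedin.com/in/jane-doe-example"],
        },
    ],
}

print(json.dumps(graph, indent=2))
```

Reusing the same `@id` on every page is what lets a parser treat all of those mentions as one entity.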

Phase 3 - Optimize for conversational and intent-based queries

Map likely follow-ups for each page. Add Q&A blocks, TL;DR summaries, and small glossaries. This aligns with how AI search engines reformulate and expand queries.
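Once the Q&A block is on the page, the same pairs can be mirrored in FAQPage markup. The helper below is an illustrative sketch: `faq_jsonld` is a made-up name and the sample question is a placeholder. The answer text in the markup should match what visitors see on the page.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical follow-up question mapped for one page.
faq = faq_jsonld([
    ("What is LLM search?", "LLM search composes an answer first, then cites its sources."),
])
print(json.dumps(faq, indent=2))
```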

Phase 4 - Reputation and authority building

Publish data-backed assets, contribute expert quotes, and pitch stories to relevant media. These brand mentions and citations make you a safer choice for answer inclusion.

Phase 5 - Measurement and iteration

Track AI citations, answer inclusion, and unlinked brand mentions. Update content and schema based on what gets cited. Treat LLM search optimization as an ongoing program, not a one-off project.
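If you log citation checks by hand or from a monitoring tool, a small script can turn the log into an inclusion rate per engine. The record shape (`engine`, `query`, `cited_domains`) and the sample rows are assumptions invented for this sketch, not real measurements.

```python
from collections import defaultdict


def inclusion_rate(observations: list[dict], domain: str) -> dict[str, float]:
    """Share of logged answers, per engine, that cite the given domain."""
    totals, hits = defaultdict(int), defaultdict(int)
    for obs in observations:
        totals[obs["engine"]] += 1
        if domain in obs["cited_domains"]:
            hits[obs["engine"]] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}


# Hypothetical log entries, one per answer you checked.
observations = [
    {"engine": "perplexity", "query": "what is llm seo", "cited_domains": ["example.com", "other.org"]},
    {"engine": "perplexity", "query": "llm seo checklist", "cited_domains": ["other.org"]},
    {"engine": "ai_overviews", "query": "what is llm seo", "cited_domains": ["example.com"]},
]
rates = inclusion_rate(observations, "example.com")
print(rates)
```

Rerun it on the same query set each week so that changes reflect your content updates rather than a shifting sample.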

Technical Tactics: On-Page and Off-Page AI SEO for LLM Search

On-page, your goal is machine readability. Use predictable formatting: H2 and H3 headings, short paragraphs, and bullet summaries where they clarify facts. Add glossaries, definitions, and FAQs so engines can grab precise passages.

Entity linking is non-negotiable. Internally, link entity homes to relevant guides and services. Externally, cite authoritative sources. This creates a clean signal path for re-ranking and groundedness.

Layer your schema. Article or BlogPosting for guides, plus Organization and Person sitewide. Add Service or Product where relevant. Use about and mentions to connect related entities. Keep sameAs links consistent across your profiles and directories.

Off-page, invest in digital PR. Earn brand mentions, list inclusions, and expert placements in reputable publications. Clean up your knowledge graph footprint across Wikipedia or Wikidata where appropriate, social profiles, and major directories. These signals make you a credible citation target.

Agentic AI and the Future of Search: Trends and Opportunities

Agentic AI goes beyond single-turn answers. Agents plan, browse, compare, and take steps toward a goal. Think of an assistant that checks reviews, validates specs, compares prices, and then suggests a shortlist with reasons.

That shift changes what content wins. Agents prefer sources with structured specs, constraints, and clear steps. If your service pages lack pricing context, availability, and eligibility rules, you’ll get skipped when an agent builds an actionable plan.

So design for orchestration. Add decision criteria, comparison-ready specs, and well-formed actions. Use schema to expose offers, FAQs, and contact or booking actions. The more your page looks like a complete module, the easier it is for agents to use.
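Here is one way that could look for a service page, plus a quick gap check. The service, price, region, and booking URL are hypothetical. The `REQUIRED` list is this sketch's own idea of "action-ready", not a requirement set by Schema.org or any engine.

```python
SITE = "https://www.example.com"  # hypothetical domain

service = {
    "@context": "https://schema.org",
    "@type": "Service",
    "@id": f"{SITE}/services/ai-seo-audit#service",
    "name": "AI SEO Audit",  # hypothetical service
    "provider": {"@id": f"{SITE}/#organization"},
    "areaServed": "US",
    "offers": {
        "@type": "Offer",
        "price": "2500.00",  # placeholder pricing context
        "priceCurrency": "USD",
        "availability": "https://schema.org/InStock",
        "eligibleRegion": "US",
    },
    "potentialAction": {
        "@type": "ReserveAction",
        "target": {"@type": "EntryPoint", "urlTemplate": f"{SITE}/book"},
    },
}

# Assumed minimum for an agent to compare and act; adjust to your business.
REQUIRED = ["offers.price", "offers.priceCurrency", "offers.availability", "potentialAction.target"]


def missing_fields(data: dict, paths: list[str] = REQUIRED) -> list[str]:
    """Return the dotted paths that are absent from the markup."""
    missing = []
    for path in paths:
        node = data
        for key in path.split("."):
            node = node.get(key) if isinstance(node, dict) else None
            if node is None:
                break
        if node is None:
            missing.append(path)
    return missing


print(missing_fields(service))
```

An empty list means the page exposes everything on your own checklist. Anything returned is a field an agent would have to guess or skip.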

Picture an agent helping a user shortlist vendors for AI SEO. It retrieves entity-rich guides, validates credibility via citations and brand mentions, compares service scope and constraints, and recommends two providers with a call to action. Your inclusion depends on factual clarity, entity disambiguation, and action-ready data.

Practical Examples: Real-World LLM SEO Optimization in Action

Example 1 - Entity-first overhaul improves answer inclusion

A marketing team reworked an FAQ hub using an entity-first approach. They created an entity home for the brand and authors, added Article and FAQPage schema, and connected about and mentions to concepts like knowledge graph and structured data. Results followed: the hub started appearing as a cited source in AI search engines for non-branded queries, and clicks rose alongside new AI citations. The win came from disambiguation and clean, fact-rich chunks.

Before, the content was long and vague with few headings. After, it used modular sections, concise definitions, Q&A blocks, inline citations, and consistent entity markup. LLMs could finally lift the right passages.

Example 2 - Digital PR drives citations and authority

A consumer brand launched a data-backed mini report and pitched it to trade publications. They earned authoritative mentions, a handful of high-quality links, and got listed on two industry resource pages. Within weeks, brand citations began showing in Perplexity answers for relevant questions, and AI Overviews started linking to the report as a source. Referral traffic and assisted conversions rose with it.

The takeaway: entity clarity plus earned authority is a reliable path to LLM search optimization. You need both.

The Complete LLM SEO Optimization Checklist

Use this checklist to ship faster and stay consistent.

Content and information design

- Define entity homes for brand, products, and authors

- Rewrite pages into short, titled chunks with crisp definitions

- Add TL;DR summaries and on-page Q&A blocks

- Include data points and cite authoritative sources

Technical and schema

- Implement Article, Organization, Person, and Service/Product schema

- Connect entities with about, mentions, mainEntityOfPage, and sameAs

- Use absolute URLs and consistent @id references across pages

- Validate JSON-LD with Google’s Rich Results Test and the Schema.org Schema Markup Validator
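Before you paste markup into a validator, a short script can catch the two `@id` problems from this list: relative identifiers and references that point at nothing. This is a sketch under assumptions. The function names and sample document are invented, and it only checks one JSON-LD document, so a reference to an entity defined on another page will show up as dangling.

```python
from urllib.parse import urlparse


def collect_ids(node, defined: set, referenced: set) -> None:
    """Walk the JSON-LD tree and sort each @id into defined or referenced."""
    if isinstance(node, dict):
        node_id = node.get("@id")
        if node_id is not None:
            # A dict holding only "@id" is a reference; anything richer defines the node.
            (referenced if set(node) == {"@id"} else defined).add(node_id)
        for value in node.values():
            collect_ids(value, defined, referenced)
    elif isinstance(node, list):
        for item in node:
            collect_ids(item, defined, referenced)


def check_ids(doc: dict) -> dict[str, list[str]]:
    """Report @id values that are not absolute URLs and references with no definition."""
    defined, referenced = set(), set()
    collect_ids(doc, defined, referenced)
    return {
        "relative": sorted(i for i in defined | referenced if not urlparse(i).scheme),
        "dangling": sorted(referenced - defined),
    }


# Hypothetical markup with one relative @id and one undefined author reference.
doc = {
    "@context": "https://schema.org",
    "@graph": [
        {"@type": "Organization", "@id": "https://www.example.com/#organization", "name": "Example Co"},
        {
            "@type": "Article",
            "@id": "/guides/llm-seo#article",
            "publisher": {"@id": "https://www.example.com/#organization"},
            "author": {"@id": "https://www.example.com/authors/jane-doe#person"},
        },
    ],
}
result = check_ids(doc)
print(result)
```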

Authority and reputation

- Publish a data-backed asset to power digital PR

- Secure expert quotes and inclusion on industry lists

- Standardize entity details across profiles and directories

Monitoring and iteration

- Track AI citations and answer inclusion for target topics

- Log brand mentions in answer engines and the open web

- Refresh content and schema based on what gets cited

Conclusion: Future-Proof Your SEO for the Age of AI Search

AI answer engines select a few passages, synthesize an answer, and cite trusted sources. To be one of them, design for machines and people. Use entity clarity, structured data, and authority building to power AI SEO Optimization for LLM Search.

Start with a flagship page. Implement schema, add Q&A, and publish one PR-worthy asset. Measure AI citations and iterate until you see steady inclusion.

Key Takeaways

- Optimize for answer inclusion, not just rankings. Passage-level clarity wins citations.

- Entity optimization and schema are the foundation for disambiguation and retrieval.

- Modular content with definitions, Q&A, and citations improves AI search visibility.

- Digital PR and brand mentions strengthen your case in re-ranking and answer selection.

- Agentic AI favors action-ready pages with structured offers, specs, and steps.

- Measure AI citations, track brand mentions, and refine content and schema regularly.